Optimizing Intersecting Geometry

In this tutorial, we show that our method optimizes geometry effectively even when triangles intersect. The code is the same as in the previous tutorial; only the scene setup differs. Intersections are handled correctly without any additional effort.
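The forward pass shows most easily why intersections need no special treatment. A depth-buffered rasterizer resolves visibility independently at every pixel and keeps whichever triangle is nearest there. Where two triangles interpenetrate, the visible boundary therefore follows the line along which their depths cross.

The pure-Python sketch below illustrates this with one flat and one tilted triangle. It is a hypothetical toy, not DRTK's CUDA rasterizer, and it assumes that a smaller z means closer to the camera.

```python
# Minimal z-buffer rasterizer sketch (illustration only, not DRTK's implementation).
# Assumption: smaller z means closer to the camera.


def edge(ax, ay, bx, by, px, py):
    """Twice the signed area of triangle (a, b, p)."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize(tris, width, height):
    """Return a per-pixel triangle index (-1 = background) using a depth test."""
    index = [[-1] * width for _ in range(height)]
    depth = [[float("inf")] * width for _ in range(height)]
    for t, (a, b, c) in enumerate(tris):
        area = edge(*a[:2], *b[:2], *c[:2])
        if area == 0:
            continue  # degenerate triangle
        for y in range(height):
            for x in range(width):
                px, py = x + 0.5, y + 0.5  # sample at pixel centers
                # Barycentric coordinates (the sign of `area` cancels out)
                w0 = edge(*b[:2], *c[:2], px, py) / area
                w1 = edge(*c[:2], *a[:2], px, py) / area
                w2 = edge(*a[:2], *b[:2], px, py) / area
                if min(w0, w1, w2) < 0:
                    continue  # pixel center lies outside this triangle
                z = w0 * a[2] + w1 * b[2] + w2 * c[2]  # interpolated depth
                if z < depth[y][x]:  # keep the nearest surface
                    depth[y][x] = z
                    index[y][x] = t
    return index


W = H = 16
flat = ((-1, -1, 5.0), (40, -1, 5.0), (-1, 40, 5.0))  # constant depth z = 5
# Tilted triangle on the plane z = 0.5 * x + 1; it passes through z = 5 at x = 8
tilted = ((-1, -1, 0.5), (40, -1, 21.0), (-1, 40, 0.5))
index = rasterize([flat, tilted], W, H)
for row in index[:2]:
    print("".join("F" if t == 0 else "T" for t in row))  # prints TTTTTTTTFFFFFFFF
```

If you move either triangle in depth, the crossing column shifts, and the visible boundary moves with it. At no point does the code compute an explicit intersection test.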

Imports

[1]:

import drtk
import numpy as np
import torch as th
import torch.nn.functional as thf
from IPython.display import display
from PIL import Image
from torchvision.utils import save_image


Triangle Scene

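In this example, we set up a scene with multiple triangles. It contains three separate shapes: two individual triangles and a rectangle composed of two triangles that share an edge. To tell the shapes apart, each gets its own color: gray, red (both halves of the rectangle) and blue. The shapes sit at different depths (the z coordinate of each vertex). The blue triangle is tilted, so its depth crosses z = 100, which is the depth of the gray triangle. As a result, the two triangles intersect.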
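In the scene cell, the rectangle is not a separate primitive. It is two rows of the face list `vi` that reuse vertices 4 and 5. The sketch below uses two hypothetical helpers that are not part of DRTK. The first lists the edges shared between faces. The second computes the per-face depth range, using values copied from `v_target`, and shows that the blue triangle is the only face whose depth varies.

```python
from collections import Counter

# Copied from the scene cell: face list and target depths (z) of the 10 vertices
vi = [[0, 1, 2], [3, 4, 5], [6, 4, 5], [7, 8, 9]]
z_target = [100, 100, 100, 50, 50, 50, 50, 91, 91, 120]


def shared_edges(faces):
    """Return undirected edges used by more than one face (hypothetical helper)."""
    counts = Counter()
    for a, b, c in faces:
        for e in ((a, b), (b, c), (c, a)):
            counts[tuple(sorted(e))] += 1
    return sorted(e for e, n in counts.items() if n > 1)


def depth_range(face, z):
    """Minimum and maximum vertex depth of one face."""
    zs = [z[i] for i in face]
    return min(zs), max(zs)


print(shared_edges(vi))  # [(4, 5)]: the diagonal of the rectangle
for face in vi:
    print(face, depth_range(face, z_target))
```

The blue triangle's depth range (91 to 120) contains 100, the depth of the flat gray triangle. The two can therefore intersect wherever they overlap on screen.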

[2]:

# Target vertex positions (x, y in pixels; z is depth)
v_target = np.asarray(
    [
        [48.76, 151.9, 100],
        [443.9, 36.69, 100],
        [287.9, 480.1, 100],
        [285.0, 118.0, 50],
        [453.0, 118.0, 50],
        [285.0, 271.0, 50],
        [453.0, 271.0, 50],
        [53.53, 388.2, 91],
        [215.7, 212.4, 91],
        [383.0, 480.9, 120],
    ],
    dtype=np.float32,
)

# Initial vertex positions
v = np.asarray(
    [
        [12.08, 31.02, 100],
        [455.8, 71.94, 100],
        [168.9, 540.1, 100],
        [260.0, 110.0, 80],
        [478.0, 110.0, 80],
        [260.0, 235.0, 80],
        [478.0, 235.0, 80],
        [75.85, 386.0, 95],
        [215.1, 226.1, 95],
        [378.8, 481.1, 280],
    ],
    dtype=np.float32,
)

# Vertex indices of each triangle; rows 1 and 2 share vertices 4 and 5 (the rectangle)
vi = np.asarray(
    [
        [0, 1, 2],
        [3, 4, 5],
        [6, 4, 5],
        [7, 8, 9],
    ],
    dtype=np.int32,
)

# Per-triangle RGB colors: gray, red, red (the rectangle), blue
ci = np.asarray(
    [
        [0.8, 0.8, 0.8],
        [0.8, 0.2, 0.1],
        [0.8, 0.2, 0.1],
        [0.2, 0.2, 0.8],
    ],
    dtype=np.float32,
)

width = 512
height = 512

# Texture coordinates are not used for shading in this tutorial, so they are set to zero
vt = np.zeros((v.shape[0], 2), dtype=np.float32)
vti = vi.copy()

# Move everything to the GPU, adding a batch dimension to the per-vertex tensors
vt = th.as_tensor(vt, dtype=th.float32)[None].cuda()
vi = th.as_tensor(vi, dtype=th.int32).cuda()
vti = th.as_tensor(vti, dtype=th.int32).cuda()
ci = th.as_tensor(ci, dtype=th.float32).cuda()
v_target = th.as_tensor(v_target)[None].cuda()
v = th.as_tensor(v)[None].cuda()

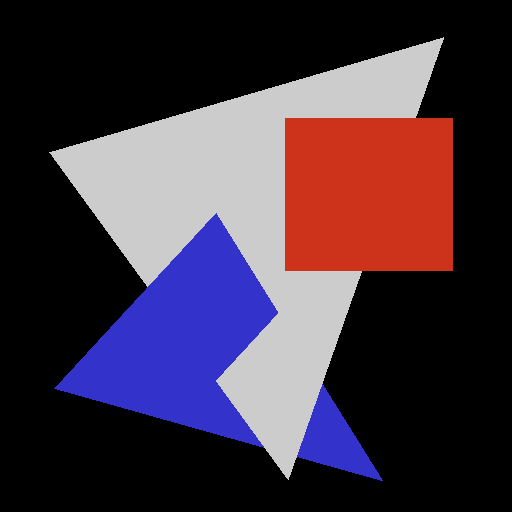

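Next, we render the target image. `drtk.rasterize` returns an index image that holds, for each pixel, the index of the visible triangle, or -1 for background. We then color each covered pixel with its triangle's color.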
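The coloring step in the next cell, `(index_img != -1)[:, None] * ci[index_img]`, needs its mask. Negative indices wrap around in both Python and PyTorch indexing, so without the mask, background pixels (index -1) would take the color of the last triangle.

The pure-Python sketch below applies the same per-pixel logic to a made-up 3 x 4 index image.

```python
# Per-triangle colors, copied from the scene cell: gray, red, red, blue
ci = [(0.8, 0.8, 0.8), (0.8, 0.2, 0.1), (0.8, 0.2, 0.1), (0.2, 0.2, 0.8)]

# Made-up index image: -1 is background, otherwise the visible triangle's index
index_img = [
    [-1, 0, 0, -1],
    [1, 1, 2, -1],
    [3, 3, -1, -1],
]


def shade(index_img, ci):
    """Color each pixel by its triangle and zero out the background."""
    out = []
    for row in index_img:
        out_row = []
        for t in row:
            color = ci[t]  # like ci[index_img]; t == -1 silently picks ci[-1]
            mask = 0.0 if t == -1 else 1.0  # like (index_img != -1)
            out_row.append(tuple(mask * c for c in color))
        out.append(out_row)
    return out


image = shade(index_img, ci)
print(image[0][0], image[2][0])  # (0.0, 0.0, 0.0) (0.2, 0.2, 0.8)
```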

[3]:

# Render the target image
index_img = drtk.rasterize(v_target, vi, height, width)
_, bary_img = drtk.render(v_target, vi, index_img)
# Look up each pixel's triangle color and zero out the background (index -1)
image_target = (index_img != -1)[:, None] * ci[index_img].permute(0, 3, 1, 2)
save_image(image_target, "img.png")
display(Image.open("img.png"))

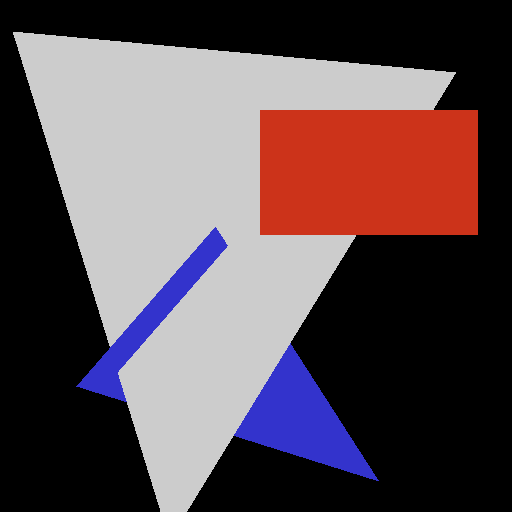

Next, we render the scene at initialization.

[4]:

# Render the initial scene
index_img = drtk.rasterize(v, vi, height, width)
_, bary_img = drtk.render(v, vi, index_img)
image = (index_img != -1)[:, None] * ci[index_img].permute(0, 3, 1, 2)
save_image(image, "img.png")
display(Image.open("img.png"))

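Next, we optimize the vertex positions to match the target image and visualize the image-space gradients along the way. The rasterized image is piecewise constant in the vertex positions, so its ordinary derivative is zero almost everywhere. `drtk.edge_grad_estimator` makes the image differentiable by estimating the gradient contributions of the discontinuities at triangle edges. As this tutorial shows, this also covers the boundaries where triangles intersect.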
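A 1D toy problem illustrates the role of `drtk.edge_grad_estimator`. Consider a scanline whose pixels are covered by a foreground segment `[0, edge)`. Each pixel value is a step function of `edge`, so autograd alone would report a zero gradient.

The sketch below instead attributes the loss gradient at the two pixels straddling the edge to the edge position, scaled by the color jump across it. It then runs plain gradient descent. The sketch is a simplified, hypothetical illustration of edge-based gradient estimation written for this page. It is not DRTK's estimator, which works on 2D triangles and their vertices.

```python
# Toy 1D edge-gradient sketch (illustration only, not DRTK's estimator).
WIDTH = 32
FG, BG = 1.0, 0.0  # foreground / background intensity


def render(edge: float) -> list:
    """Hard coverage test at pixel centers i + 0.5; piecewise constant in `edge`."""
    return [FG if i + 0.5 < edge else BG for i in range(WIDTH)]


def mse(img: list, target: list) -> float:
    return sum((a - b) ** 2 for a, b in zip(img, target)) / WIDTH


def edge_grad(edge: float, target: list) -> float:
    """Estimate dL/d(edge) from the two pixels on either side of the edge."""
    img = render(edge)
    g = [2.0 * (a - b) / WIDTH for a, b in zip(img, target)]  # dL/dI_i for the MSE
    k = sum(1 for v in img if v == FG)  # index of the first background pixel
    left = g[k - 1] if k > 0 else 0.0
    right = g[k] if k < WIDTH else 0.0
    # Moving the edge right turns background into foreground: the image jumps by FG - BG
    return 0.5 * (left + right) * (FG - BG)


def fit(edge: float, target: list, lr: float = 4.0, steps: int = 200) -> float:
    """Plain gradient descent on the edge position."""
    for _ in range(steps):
        edge -= lr * edge_grad(edge, target)
    return edge


target = render(20.0)
for start in (6.0, 28.0):
    edge = fit(start, target)
    print(f"start={start}: edge={edge:.3f}, loss={mse(render(edge), target)}")
```

From both starting points, the edge stops within one pixel of the target, at the first position where the rendered scanline matches the target exactly. Past that point the estimate is zero, because both boundary pixels agree with the target.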

[5]:

import av
import IPython.display
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image, ImageDraw, ImageFont
from tqdm import tqdm

# Output video: a 2 x 3 grid of 512 x 512 panels (1024 wide, 1536 tall)
container = av.open(
    "out.mp4",
    mode="w",
    format="mp4",
    options={"movflags": "frag_keyframe+empty_moov"},
)
video_stream = container.add_stream(
    "libx264",
    width=1024,
    height=1024 + 512,
    pix_fmt="yuv420p",
    framerate=24,
)
font = ImageFont.truetype("/usr/share/fonts/truetype/ttf-dejavu/DejaVuSans.ttf", 24)

loss_list = []
v_param = th.nn.Parameter(v.clone())
opt = th.optim.SGD([v_param], lr=800.0)

tensor = []


# A simple hook to save the image-space gradient
def save_tensor(x: th.Tensor):
    tensor.append(x)


def conv_img(x: th.Tensor) -> th.Tensor:
    """Convert a [0, 1] image (N x C x H x W or C x H x W) to an H x W x C uint8 CPU tensor."""
    with th.no_grad():
        x = (x * 255).type(th.long).clamp(0, 255).cpu()
        if len(x.shape) == 4:
            x = x[0, :, :, :]
        return x.type(th.uint8).transpose(0, 2).transpose(0, 1)


def conv_img_viridis(x: th.Tensor) -> Image.Image:
    """Map a 2D tensor with values in [0, 1] to an RGB image using a custom palette."""
    import seaborn as sns

    with th.no_grad():
        assert x.ndim == 2
        colored = (
            sns.blend_palette(["#8c179a", "#64c5c2", "#fef46a"], 6, as_cmap=True)(
                x.cpu().numpy().squeeze()
            )[..., :-1]
            * 255.0
        ).astype(np.uint8)
        return Image.fromarray(colored)

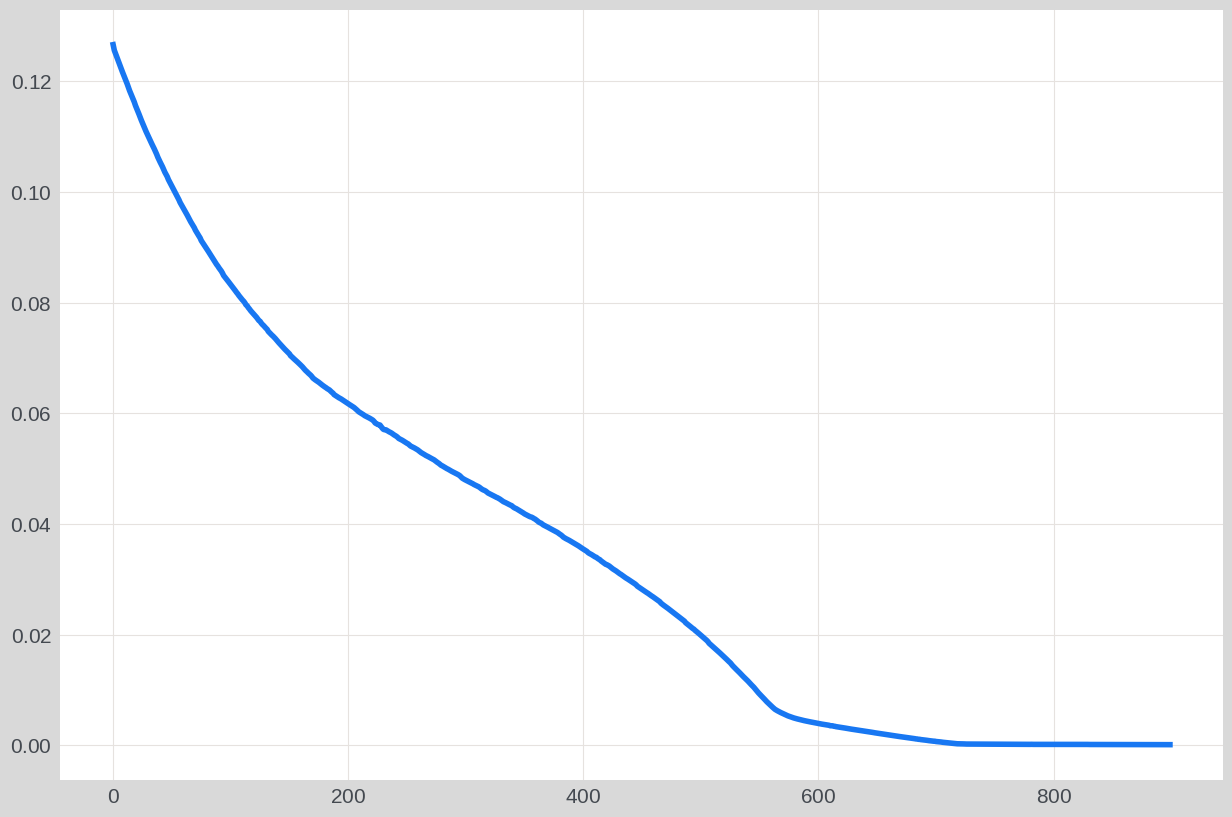

for iter in tqdm(range(900)):

tensor.clear()

index_img = drtk.rasterize(v_param, vi, width=512, height=512)

depth_img, bary_img = drtk.render(v_param, vi, index_img)

image = (index_img != -1)[:, None] * ci[index_img].permute(0, 3, 1, 2)

# Make `image` differentiable

image_differentiable = drtk.edge_grad_estimator(

v_param, vi, bary_img, image, index_img, v_pix_img_hook=save_tensor

)

# Compute loss and backpropagate

l2_error = thf.mse_loss(image_differentiable, image_target, reduction="none")

l2_loss = l2_error.mean()

l2_loss.backward()

loss_list.append(l2_loss.item())

opt.step()

opt.zero_grad()

if iter % 8 == 0:

im = conv_img(image)

im[:129, :129] = 255

im[:128, :128] = conv_img(thf.avg_pool2d(image_target, 4))

error = conv_img(thf.interpolate(l2_error, scale_factor=1.0))

grad = tensor[0]

grad = grad * 400000.0

gimx = th.as_tensor(np.asarray(conv_img_viridis(grad[0, 0] * 0.5 + 0.5)))

gimy = th.as_tensor(np.asarray(conv_img_viridis(grad[0, 1] * 0.5 + 0.5)))

gimz = th.as_tensor(np.asarray(conv_img_viridis(grad[0, 2] * 0.5 + 0.5)))

im[-1:] = 255

error[-1:] = 255

im1 = th.cat([im, error, th.zeros_like(im)], dim=0)

im2 = th.cat(

[gimx.expand(-1, -1, 3), gimy.expand(-1, -1, 3), gimz.expand(-1, -1, 3)],

dim=0,

)

im = th.cat([im1.expand(-1, -1, 3), im2], dim=1)

im = Image.fromarray(im.cpu().numpy())

draw = ImageDraw.Draw(im)

draw.text((0, 128 - 20), " Target", (255, 255, 255), font=font)

draw.text((0, 512 - 25), " Render", (255, 255, 255), font=font)

draw.text((0, 512 + 512 - 25), " Error", (255, 255, 255), font=font)

draw.text((512, 512 - 25), " grad_x", (255, 255, 255), font=font)

draw.text((512, 512 + 512 - 25), " grad_y", (255, 255, 255), font=font)

draw.text((512, 512 + 512 + 512 - 25), " grad_z", (255, 255, 255), font=font)

im = np.asarray(im)

container.mux(

video_stream.encode(av.VideoFrame.from_ndarray(im, format="rgb24"))

)

for packet in video_stream.encode():

container.mux(packet)

container.close()

plt.plot(loss_list)

plt.show()

IPython.display.Video("out.mp4", embed=True, width=512 * 1.5, height=512 * 2)

100%|██████████| 900/900 [00:32<00:00, 27.88it/s]

[5]:
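In the loop, each channel of the image-space gradient captured by the hook goes through three steps:

1. It is multiplied by 400000.
2. It is mapped through `g * 0.5 + 0.5`.
3. It is passed to a matplotlib colormap, which by default maps inputs below 0 or above 1 to its end colors.

Zero gradient therefore lands in the middle of the palette, and the sign of the gradient decides which end a pixel moves toward. The minimal sketch below reproduces that mapping with the clipping made explicit; the function name `to_palette_input` is hypothetical.

```python
def to_palette_input(g: float, scale: float = 400000.0) -> float:
    """Map a signed gradient value to [0, 1]; 0.5 means zero gradient."""
    u = g * scale * 0.5 + 0.5
    # A colormap called on u renders values outside [0, 1] with its end colors
    return min(max(u, 0.0), 1.0)


for g in (-1e-5, -1e-6, 0.0, 1e-6, 1e-5):
    print(f"{g:+.0e} -> {to_palette_input(g):.2f}")
```

The factor 400000 only sets how strong a gradient must be to saturate the palette: here, any |g| of at least 2.5e-6. It has no effect on the optimization itself.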

This concludes the “Optimizing Intersecting Geometry” tutorial.